Hadoop/SAP data-fix frameworks with Azkaban orchestration, GDPR-compliant cleansing, Hive/Spark, and BI monitoring.

Overview

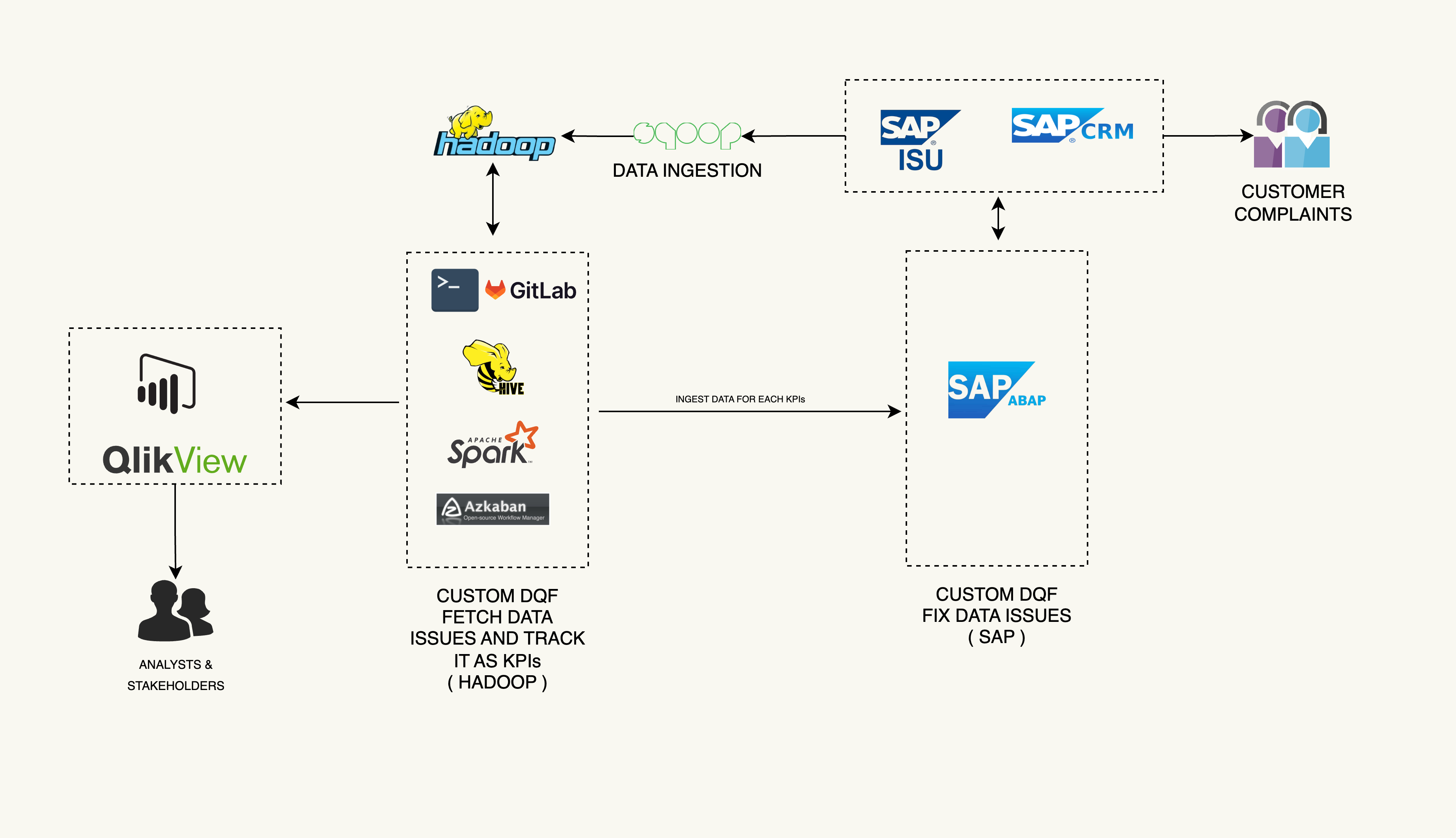

At Cognizant (April 2014 – June 2022), I built automated data-fix frameworks across Hadoop and SAP, enforced data governance through audit logging, and delivered clean datasets to downstream billing and CRM systems for British Gas UK (Centrica) and Philips Netherlands. The work combined Azkaban orchestration, GDPR-compliant data cleansing, Hive/Spark transformations, migration to an AWS data lake, and BI monitoring.

End-to-End Data Correction Pipelines with Audit Logging

- Built automated data-fix frameworks in Hadoop and SAP, orchestrated with Azkaban and with built-in validation and audit logging, delivering clean, corrected datasets to downstream billing and CRM systems.

- Extracted, corrected, and cleansed large-scale datasets using SQL, SAP ABAP, LSMW, and custom-built tools, ensuring GDPR compliance and significantly improving data quality while reducing customer complaints.

- Built Hive tables, Sqoop ingestion jobs, and Spark jobs to integrate enterprise data, shortening reporting timelines by 35%.
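The fix-validate-audit pattern behind these frameworks can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the production Hadoop/SAP code: the function `run_data_fix`, the `(rule name, field, correction)` rule format, the `AuditEntry` fields, and the `id`/`postcode` record fields are all hypothetical. Each correction is applied to a copy of the record, every actual change is written to an audit log, and only records that pass validation are released downstream.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable

# Hypothetical rule format: (rule name, field to correct, correction function).
Rule = tuple[str, str, Callable[[Any], Any]]


@dataclass
class AuditEntry:
    """One audited change: which record, which field, before/after, and why."""
    record_id: str
    field: str
    old_value: Any
    new_value: Any
    rule: str
    fixed_at: str


def run_data_fix(records: list[dict], rules: list[Rule],
                 validate: Callable[[dict], bool]):
    """Apply correction rules, audit every change, then split valid from rejected records."""
    audit_log: list[AuditEntry] = []
    clean: list[dict] = []
    rejected: list[dict] = []
    for rec in records:
        fixed = dict(rec)  # work on a copy; the source record is never mutated
        for rule_name, field_name, fix in rules:
            old = fixed.get(field_name)
            new = fix(old)
            if new != old:  # only real changes are audited
                fixed[field_name] = new
                audit_log.append(AuditEntry(
                    record_id=str(fixed.get("id")),
                    field=field_name,
                    old_value=old,
                    new_value=new,
                    rule=rule_name,
                    fixed_at=datetime.now(timezone.utc).isoformat(),
                ))
        # Only records that pass validation are released to downstream systems.
        (clean if validate(fixed) else rejected).append(fixed)
    return clean, rejected, audit_log


# Example (hypothetical data): normalise postcodes, reject records still lacking one.
rules = [
    ("normalise_postcode", "postcode",
     lambda v: " ".join(v.split()).upper() if isinstance(v, str) else v),
]
records = [{"id": 1, "postcode": " sw1a  1aa "}, {"id": 2, "postcode": None}]
clean, rejected, audit = run_data_fix(records, rules,
                                      validate=lambda r: bool(r.get("postcode")))
print(clean)                      # [{'id': 1, 'postcode': 'SW1A 1AA'}]
print(len(rejected), len(audit))  # 1 1
```

Keeping the audit log separate from the corrected data means the same log can be persisted to a governance table, while rejected records can be routed to a manual-review queue instead of silently reaching billing or CRM.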

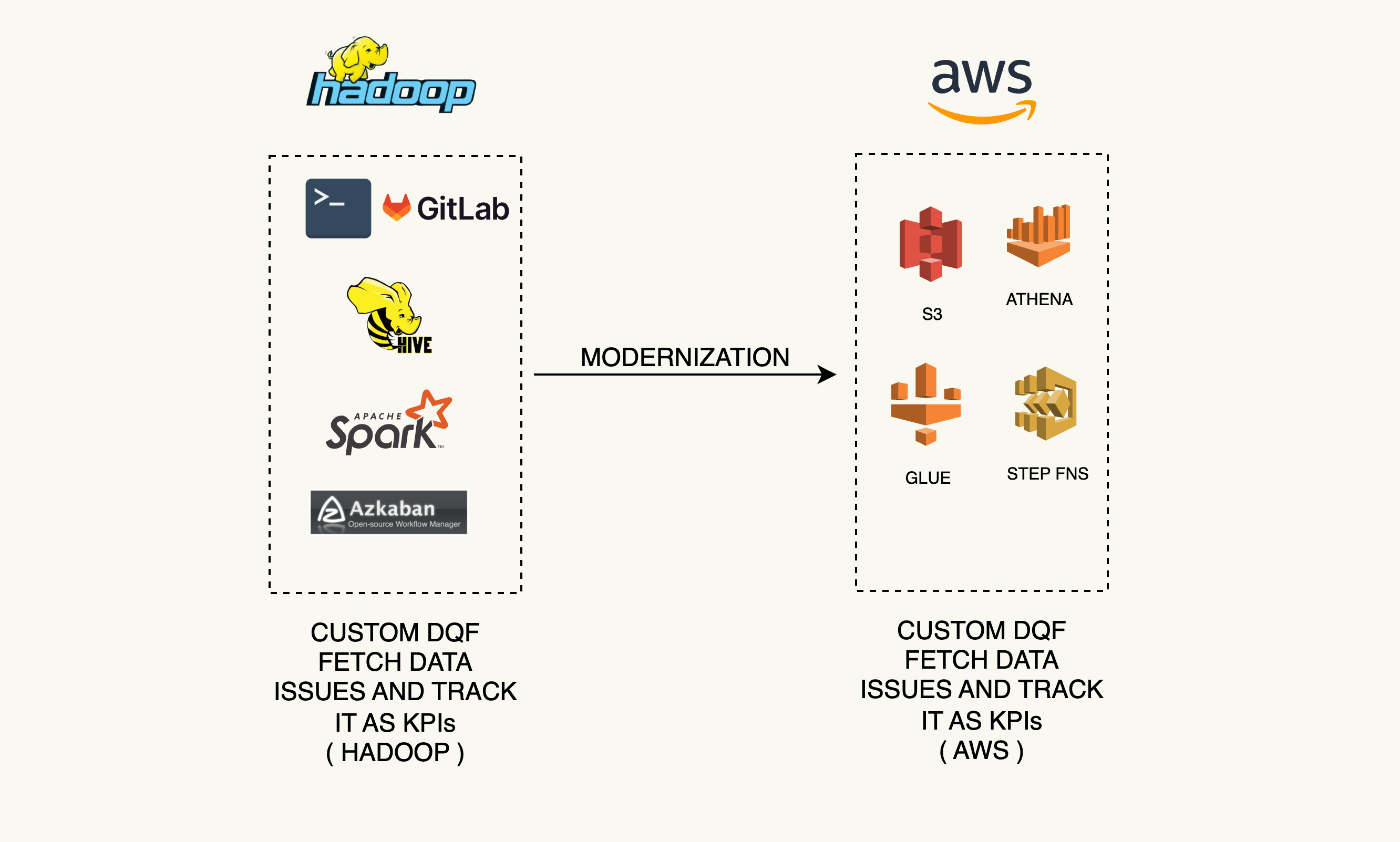

Migrated Legacy Pipelines to AWS with Monitoring & KPIs

- Built monitoring dashboards in Power BI and QlikView, enabling proactive detection of anomalies in production data pipelines.

- Implemented AWS-based ETL pipelines to track and fix systemic data issues, turning recurring errors into measurable KPIs and improving reliability across CRM systems.
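Turning recurring errors into KPIs amounts to aggregating an issue log against the volume processed. The sketch below is an assumption-laden illustration rather than the actual AWS pipeline: the `DataIssue` schema, the `issue_kpis` function, and the run identifiers are hypothetical. It computes an error rate per run and ranks the most frequent `(system, issue type)` pairs, which are the kind of figures a Power BI or QlikView dashboard could plot over time.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DataIssue:
    """One logged data-quality failure (hypothetical schema)."""
    run_id: str      # identifier of the pipeline run that found the issue
    system: str      # e.g. "CRM" or "Billing"
    issue_type: str  # e.g. "missing_postcode"


def issue_kpis(issues: list[DataIssue], records_processed: dict[str, int], top_n: int = 3):
    """Turn a raw issue log into per-run error rates and the most frequent recurring issues."""
    issues_per_run = Counter(i.run_id for i in issues)
    error_rate = {
        run: issues_per_run[run] / total  # Counter returns 0 for runs with no issues
        for run, total in records_processed.items()
        if total > 0  # skip empty runs to avoid division by zero
    }
    recurring = Counter((i.system, i.issue_type) for i in issues).most_common(top_n)
    return error_rate, recurring


# Example with made-up runs and issues.
issues = [
    DataIssue("run-01", "CRM", "missing_postcode"),
    DataIssue("run-01", "CRM", "missing_postcode"),
    DataIssue("run-02", "Billing", "invalid_tariff"),
]
rates, top = issue_kpis(issues, {"run-01": 100, "run-02": 50})
print(rates)  # {'run-01': 0.02, 'run-02': 0.02}
print(top)    # [(('CRM', 'missing_postcode'), 2), (('Billing', 'invalid_tariff'), 1)]
```

Normalising by records processed, rather than reporting raw issue counts, keeps the KPI comparable between large and small runs.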

Enterprise Data Integration & Reporting Solutions

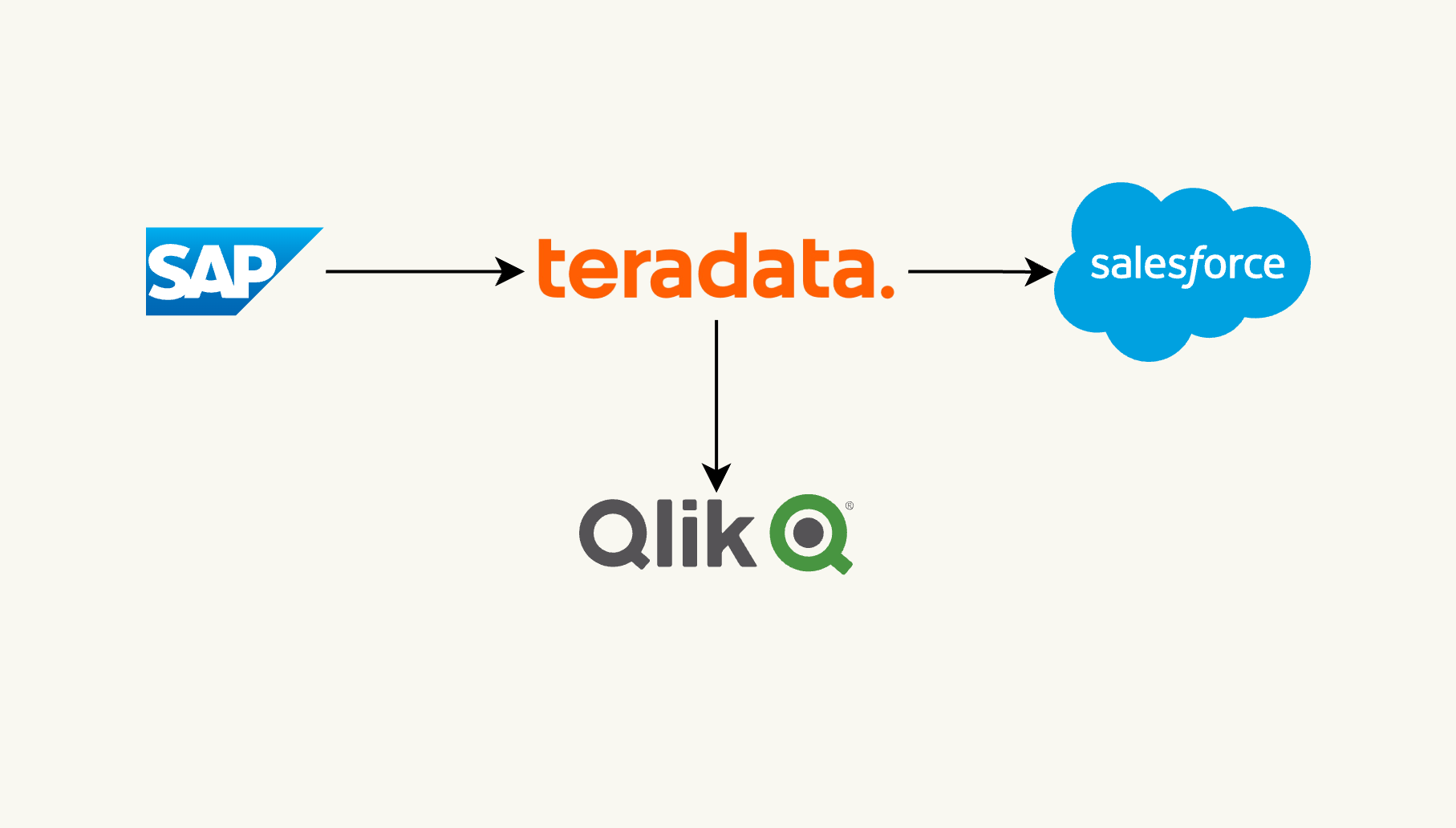

- Integrated SAP ECC, Teradata, and Salesforce, enabling unified analysis of customer, invoice, and revenue information.

- Built data solutions that supply consistent, reliable data to Qlik Sense dashboards, improving the accuracy of financial and healthcare reporting.